Parquet Data - Big Data File Formats Blog Luminousmen: Suppose we have a list of boolean values, say 0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1 (0 = false and 1 = true). With run-length encoding this becomes 1000,0000,1000,0001, where 1000 is binary for 8, the number of occurrences of the value that follows it (0000 for false, then 0001 for true).
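The boolean example above can be reproduced with a few lines of Python. This is only a minimal sketch of plain run-length encoding, not Parquet's actual on-disk byte layout; the helper names `rle_encode` and `as_nibbles` are made up for illustration, and the 4-bit rendering only works for counts up to 15.

```python
from itertools import groupby

def rle_encode(values):
    """Return (run_length, value) pairs for runs of consecutive equal values."""
    return [(len(list(group)), value) for value, group in groupby(values)]

def as_nibbles(pairs):
    """Render every count and value as a 4-bit binary string, as in the example."""
    return ",".join(f"{n:04b}" for pair in pairs for n in pair)

bits = [0] * 8 + [1] * 8
pairs = rle_encode(bits)
print(pairs)              # [(8, 0), (8, 1)]
print(as_nibbles(pairs))  # 1000,0000,1000,0001
```

Sixteen values shrink to two (count, value) pairs, which is why long runs of repeated values compress so well.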

Connecting to Parquet data looks just like connecting to any relational data source: create a connection string using the required connection properties. Parquet data can also be loaded from Cloud Storage into BigQuery. The Parquet project describes its motivation as follows: "We created Parquet to make the advantages of compressed, efficient columnar data representation available to any project in the Hadoop ecosystem." In Parquet 1.9.0, data in Apache Parquet files is written against a specific schema.

All data types should indicate the data format traits but can ... Parquet operates well with complex data in large volumes. It is known both for its performant data compression and for its ability to handle a wide variety of encoding types. Parquet is a binary format and allows encoded data types. Parquet files are most commonly compressed with the Snappy compression algorithm.
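Because Parquet is a binary format, a file can be recognised from its bytes alone: it starts and ends with the 4-byte marker `PAR1`, and the 4 bytes before the trailing marker hold the footer length as a little-endian integer. The sketch below checks that framing on a synthetic byte string. The function name `footer_length` and the fake payload are assumptions for illustration; this is not a real Parquet reader.

```python
import struct

MAGIC = b"PAR1"  # 4-byte marker at both ends of a Parquet file

def footer_length(data: bytes) -> int:
    """Return the footer metadata length, or raise ValueError if the framing is wrong."""
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("missing PAR1 magic bytes")
    (length,) = struct.unpack("<I", data[-8:-4])  # little-endian uint32
    if length > len(data) - 12:
        raise ValueError("footer length exceeds file size")
    return length

# Synthetic stand-in: magic, 10 bytes of "data", a 5-byte "footer", its length, magic.
fake = MAGIC + b"\x00" * 10 + b"\x01" * 5 + struct.pack("<I", 5) + MAGIC
print(footer_length(fake))  # 5
```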

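The Parquet documentation says of the record shredding and assembly approach it uses for nested data: "We believe this approach is superior to simple flattening of nested name spaces."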
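To make the contrast with flattening concrete, here is a heavily simplified sketch of record shredding in the style of the Dremel paper, which Parquet follows. It assumes each record is just one repeated field (a list of tags), so the maximum repetition and definition levels are both 1. The function names `shred` and `assemble` are hypothetical, and real Parquet handles arbitrary nesting depth.

```python
def shred(records):
    """Shred one repeated field into values plus repetition/definition levels."""
    values, rep_levels, def_levels = [], [], []
    for tags in records:
        if not tags:
            # Empty list: emit one placeholder entry with nothing defined.
            rep_levels.append(0)
            def_levels.append(0)
            continue
        for i, tag in enumerate(tags):
            rep_levels.append(0 if i == 0 else 1)  # 0 marks the start of a record
            def_levels.append(1)                   # 1 means a value is present
            values.append(tag)
    return values, rep_levels, def_levels

def assemble(values, rep_levels, def_levels):
    """Rebuild the original records from the shredded column."""
    records, remaining = [], iter(values)
    for rep, definition in zip(rep_levels, def_levels):
        if rep == 0:
            records.append([])
        if definition == 1:
            records[-1].append(next(remaining))
    return records

records = [["a", "b"], [], ["c"]]
column = shred(records)
print(column)  # (['a', 'b', 'c'], [0, 1, 0, 0], [1, 1, 0, 1])
assert assemble(*column) == records
```

The values stay in one flat column, while the two small integer streams carry the structure, so the empty list in the second record survives the round trip.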

The Parquet format's LogicalType stores the type annotation. Parquet metadata is encoded using Apache Thrift. Parquet files maintain the schema along with the data, which makes the data more structured to read and process.

Parquet is built from the ground up with complex nested data structures in mind, and uses the record shredding and assembly algorithm described in the Dremel paper. In Azure Data Factory, Parquet complex data types (e.g. map, list, struct) are currently supported only in data flows, not in copy activity. This connector was released in November 2020. There were not only some simple log files, but also data that I had to convert into a slowly changing dimension type 2.

Parquet is built to support very efficient compression and encoding schemes. The same columns are stored together in each row group.
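The row-group layout mentioned above can be pictured with plain Python lists. This is a sketch of the idea only, assuming tiny in-memory rows; the helper `to_row_groups` and the sample data are invented for illustration and say nothing about Parquet's real page or chunk encoding.

```python
def to_row_groups(rows, columns, rows_per_group):
    """Split rows into groups; inside each group keep one list per column."""
    groups = []
    for start in range(0, len(rows), rows_per_group):
        chunk = rows[start:start + rows_per_group]
        groups.append({name: [row[name] for row in chunk] for name in columns})
    return groups

rows = [
    {"id": 1, "city": "Oslo"},
    {"id": 2, "city": "Lima"},
    {"id": 3, "city": "Pune"},
]
groups = to_row_groups(rows, ["id", "city"], rows_per_group=2)
print(groups[0])  # {'id': [1, 2], 'city': ['Oslo', 'Lima']}
print(groups[1])  # {'id': [3], 'city': ['Pune']}

# Reading one column touches only that column's chunk in each row group.
ids = [value for group in groups for value in group["id"]]
print(ids)  # [1, 2, 3]
```

Keeping each column's values together is what lets a reader skip the columns a query does not need.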

Parquet is an open source file format available to any project in the Hadoop ecosystem. It is natively used in the Apache ecosystem, for instance in Hadoop and Spark. Each attribute in Common Data Model entities can be associated with a single data type.

Apache Parquet data organized by a data source.

To use complex types in data flows, do not import the file schema in the dataset; leave the schema blank. Then, in the source transformation, import the projection. In order to illustrate how it works, I provided some files to be used in Azure Storage.

PySpark SQL provides methods to read a Parquet file into a DataFrame and to write a DataFrame to Parquet files: the parquet() function of DataFrameReader reads a Parquet file, and the parquet() function of DataFrameWriter writes one.

When you load Parquet data from Cloud Storage into BigQuery, you can load the data into a new table or partition, or you can append to or overwrite an existing table or partition. Third-party drivers and adapters provide straightforward access to Parquet data from popular applications like BizTalk, MuleSoft, SQL SSIS, Microsoft Flow, Power Apps, Talend, and many more.

Unlike some formats, Parquet can store data with a specific primitive type: boolean, numeric (int32, int64, int96, float, double), or byte array. There is an older representation of the logical type annotations called ConvertedType. Parquet's hybrid encoding combines run-length encoding with bit packing to store data more efficiently. This results in a file that is optimized for query performance and minimizing I/O.
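The combination of run-length and bit-packing encoding mentioned above can be sketched for boolean values as follows. This is a toy version under stated assumptions: the tuple output, the `min_run` threshold, and the function names are all made up for illustration, and real Parquet uses a compact byte layout with bit-packed runs in groups of 8 values.

```python
def bit_pack(bools):
    """Pack booleans 8 per byte, first value in the least significant bit."""
    out = bytearray((len(bools) + 7) // 8)
    for i, flag in enumerate(bools):
        if flag:
            out[i // 8] |= 1 << (i % 8)
    return bytes(out)

def bit_unpack(data, count):
    """Inverse of bit_pack for the first `count` values."""
    return [bool(data[i // 8] >> (i % 8) & 1) for i in range(count)]

def encode(bools, min_run=8):
    """Toy hybrid: long runs become ("rle", n, value), the rest ("packed", n, bytes)."""
    out, pending, i = [], [], 0
    while i < len(bools):
        j = i
        while j < len(bools) and bools[j] == bools[i]:
            j += 1  # extend the current run of equal values
        if j - i >= min_run:
            if pending:
                out.append(("packed", len(pending), bit_pack(pending)))
                pending = []
            out.append(("rle", j - i, bools[i]))
        else:
            pending.extend(bools[i:j])
        i = j
    if pending:
        out.append(("packed", len(pending), bit_pack(pending)))
    return out

flags = [True, False, True, True] + [False] * 12
print(encode(flags))  # [('packed', 4, b'\r'), ('rle', 12, False)]
```

Short, irregular stretches cost one bit per value, while the long run of `False` collapses to a single (count, value) pair.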

Parquet also defines logical types that can be used to store data, by specifying how the primitive types should be interpreted.
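As a rough illustration of how a logical type annotation changes the reading of a primitive value, the sketch below interprets three well-known pairings: a byte array annotated as a UTF-8 string, an int32 annotated as a date (days since the Unix epoch), and an int64 annotated as a millisecond timestamp. The function `interpret` and the string labels are assumptions made for this example, not an API of any Parquet library.

```python
from datetime import date, datetime, timedelta, timezone

EPOCH_DATE = date(1970, 1, 1)
EPOCH_TS = datetime(1970, 1, 1, tzinfo=timezone.utc)

def interpret(physical, logical, raw):
    """Interpret a primitive value according to an (illustrative) annotation label."""
    if (physical, logical) == ("BYTE_ARRAY", "STRING"):
        return raw.decode("utf-8")
    if (physical, logical) == ("INT32", "DATE"):
        return EPOCH_DATE + timedelta(days=raw)
    if (physical, logical) == ("INT64", "TIMESTAMP_MILLIS"):
        return EPOCH_TS + timedelta(milliseconds=raw)
    return raw  # no annotation: leave the primitive value unchanged

print(interpret("BYTE_ARRAY", "STRING", b"parquet"))  # parquet
print(interpret("INT32", "DATE", 18993))              # 2022-01-01
print(interpret("INT32", None, 18993))                # 18993
```

The stored bytes are the same in each case; only the annotation decides whether 18993 is a plain number or a calendar date.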

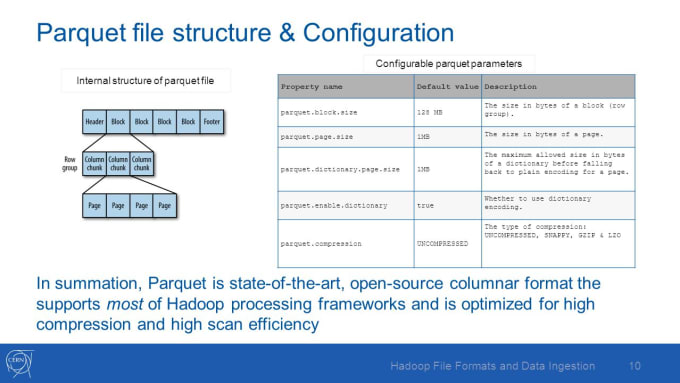

Apache Parquet is a columnar file format that provides optimizations to speed up queries and is a far more efficient file format than CSV or JSON.

A schema automatically implies data types for the fields that compose it. This allows clients to easily and efficiently serialise and deserialise the data when reading and writing to Parquet format. Parquet is a columnar format that is supported by many other data processing systems. The drawback again is that the transport files must be expanded into individual Avro and Parquet files (41% and 66%). For further information, see "Parquet Files".

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model, or programming language.